Quickstart

This guide takes about 5 minutes and walks you through running a containerized application using the Kurtosis CLI. You'll install Kurtosis, deploy an application from a GitHub locator, inspect your running environment, and then modify the deployed application by passing in a JSON config.

Install Kurtosis

Before you get started, make sure you have:

- Installed Docker and made sure the Docker daemon is running on your machine (e.g. open Docker Desktop). You can quickly check whether Docker is running by running the following from your terminal, which lists all your Docker images:

docker image ls

- Installed Kurtosis, or upgraded Kurtosis to the latest version. You can check whether Kurtosis is running using the command below, which prints your current Kurtosis engine version and CLI version:

kurtosis version
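The two prerequisite checks above can be combined into a small preflight script. This is a minimal sketch, not part of Kurtosis: check_tool is a hypothetical helper name, and it only confirms that a command is on your PATH. It does not confirm that the Docker daemon is actually running, which is what docker image ls tells you.

```shell
#!/bin/sh
# check_tool is a hypothetical helper (not a Kurtosis or Docker command).
# It reports whether a command is available on PATH.
# Returns 0 if the command is found, 1 otherwise.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1 found"
  else
    echo "missing: $1 is not on your PATH"
    return 1
  fi
}

# Check both prerequisites. "|| true" keeps the script going so that
# both results are printed even if the first tool is missing.
check_tool docker || true
check_tool kurtosis || true
```

If both lines report ok, follow up with docker image ls and kurtosis version to confirm that the daemon and the engine respond.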

This guide will have you writing Kurtosis Starlark. You can optionally install the VSCode plugin to get syntax highlighting, autocomplete, and documentation.

Check out this guide to run your Docker Compose setup with Kurtosis in one line!

Run a basic package from GitHub

Run the following in your favorite terminal:

kurtosis run github.com/kurtosis-tech/basic-service-package --enclave quickstart

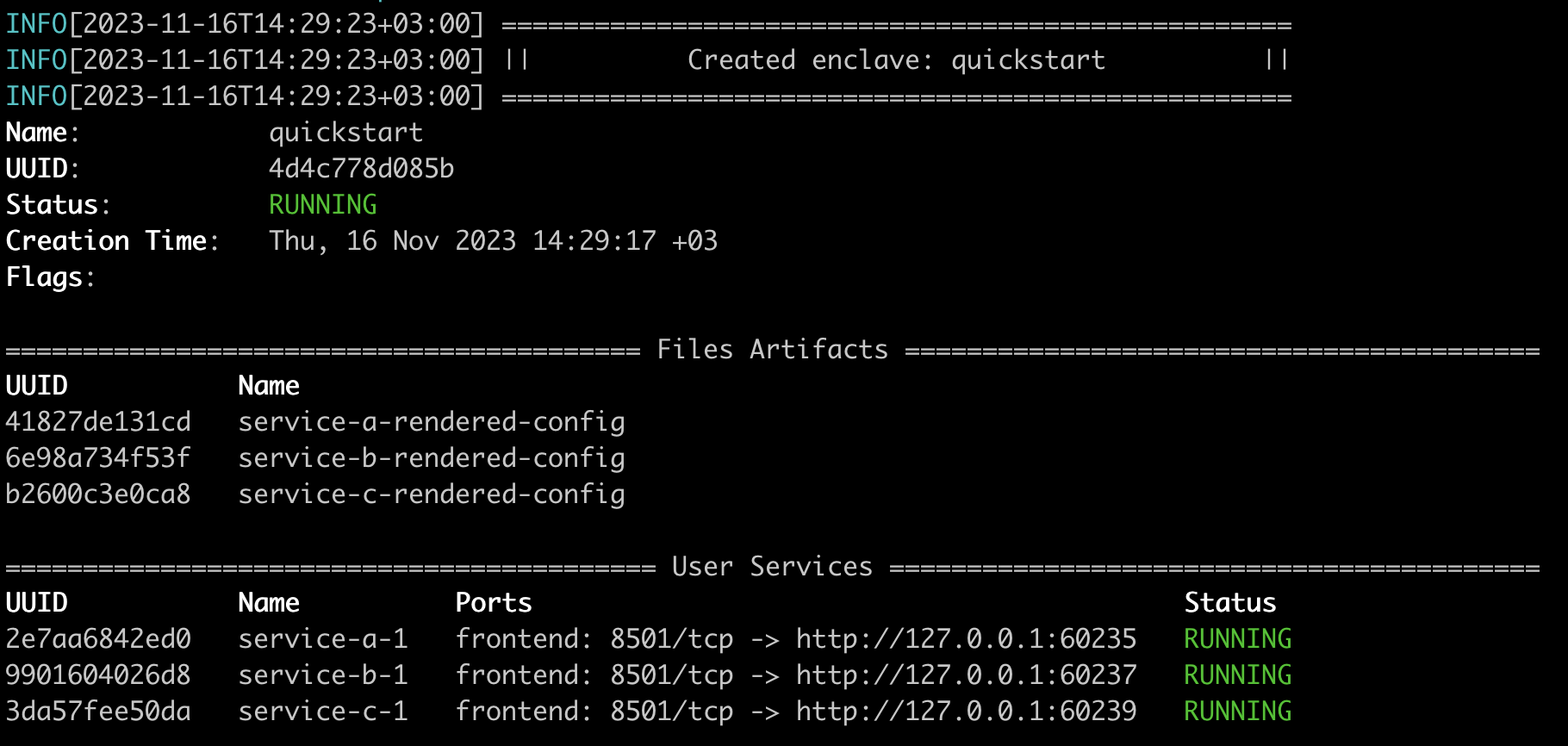

You should get output that looks like:

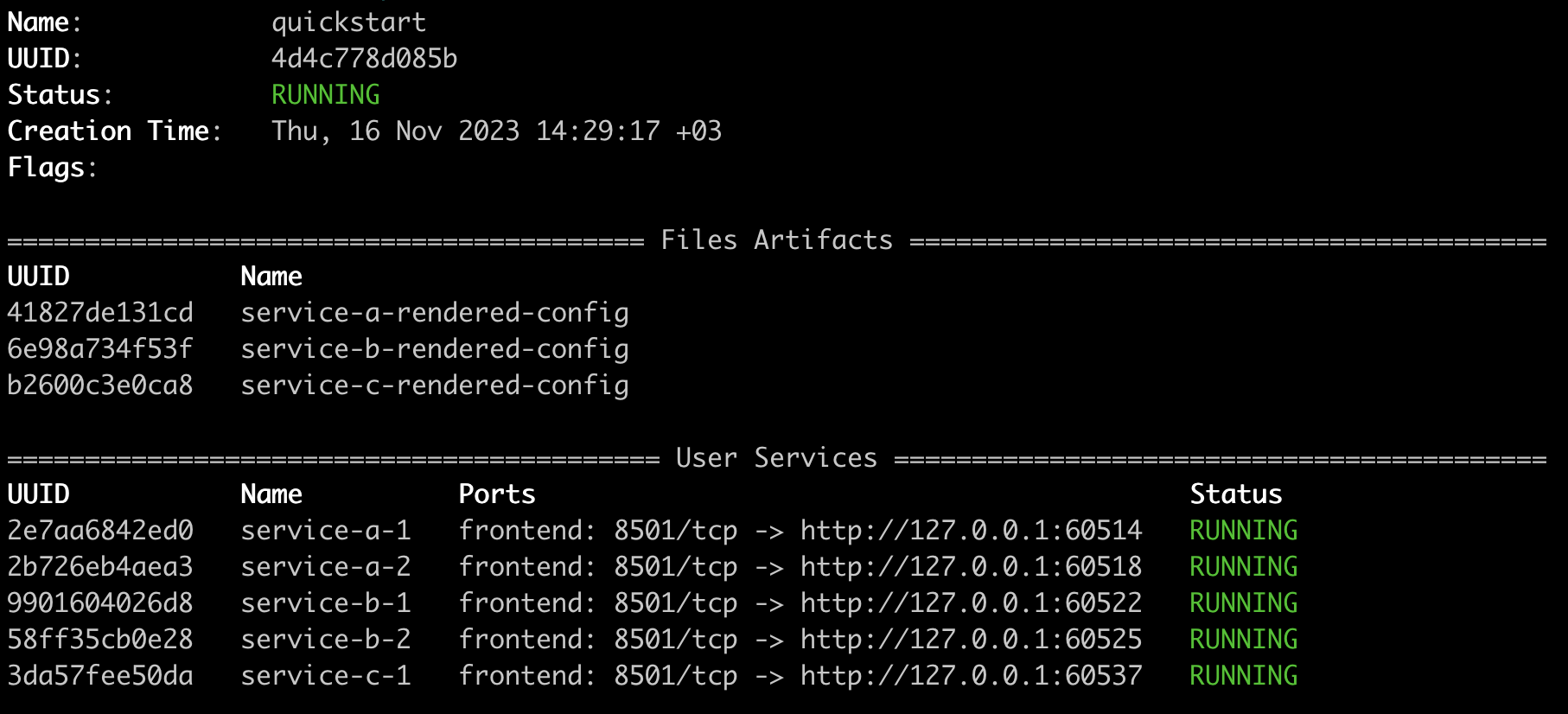

By running this command, you can see the basic concepts of Kurtosis at work:

- github.com/kurtosis-tech/basic-service-package is the package you used, and it contains the logic to spin up your application.

- Your application runs in an enclave, which you named quickstart via the --enclave flag.

- Your enclave has both services and files artifacts, which contain the dynamically rendered configuration files of each service.

Inspect your deployed application

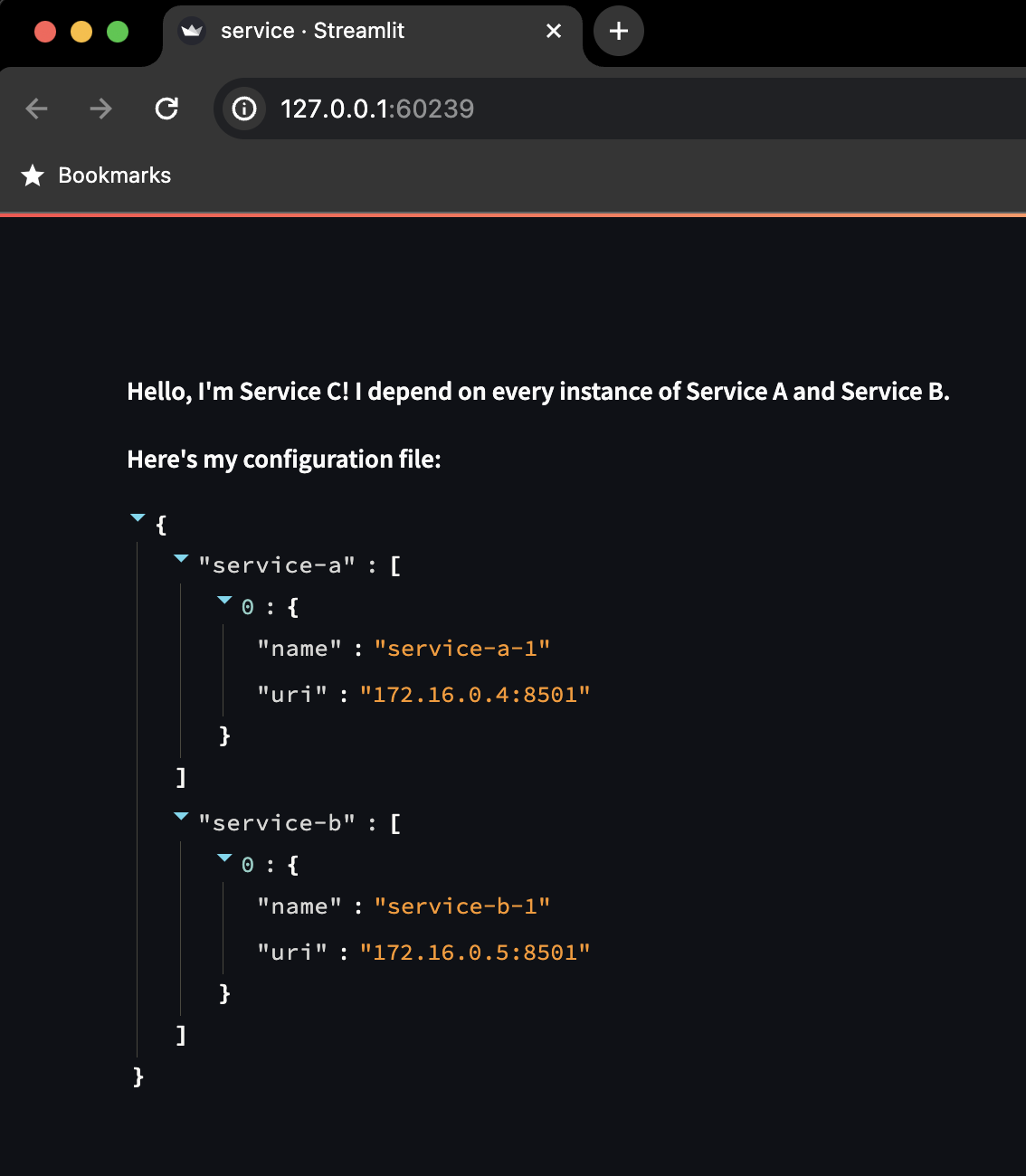

Command-click, or copy-and-paste to your browser, the URL next to the service called service-c-1 in your CLI output. This local port binding is handled automatically by Kurtosis, ensuring no port conflicts happen on your local machine as you work with your environments. You should see a simple frontend:

Service C depends on Service A and Service B, and has a configuration file containing their private IP addresses that it can use to communicate with them. To check that this is true, copy the files artifact containing this config file out of the enclave:

kurtosis files download quickstart service-c-rendered-config

This will put the service-c-rendered-config files artifact on your machine. You can see its contents with:

cat service-c-rendered-config/service-config.json

You should see the rendered config file with the contents:

{
  "service-a": [{"name": "service-a-1", "uri": "172.16.12.4:8501"}],
  "service-b": [{"name": "service-b-1", "uri": "172.16.12.5:8501"}]
}
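To pull just the addresses out of a rendered config like the one above, a short shell function is enough. This is a sketch under assumptions: list_uris is a hypothetical helper name, and it relies on the file using the "uri": "host:port" layout shown in this guide rather than doing real JSON parsing.

```shell
# list_uris is a hypothetical helper, not a Kurtosis command.
# $1 = path to a rendered service-config.json
# Prints one host:port per line by matching the "uri" fields textually.
list_uris() {
  grep -o '"uri": *"[^"]*"' "$1" | cut -d '"' -f 4
}
```

After downloading the files artifact, you could call it as list_uris service-c-rendered-config/service-config.json. For anything beyond a quick look, a real JSON parser is the safer choice.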

In this step, you saw two ways to interact with your enclave:

- Accessing URLs via automatically generated local port bindings

- Transferring files artifacts to your machine, for inspection

More ways to interact with an enclave

You can also perform the actions you would expect from a standard Docker or Kubernetes deployment, like:

- Shell into a service:

kurtosis service shell quickstart service-c-1

- See a service's logs:

kurtosis service logs quickstart service-c-1

- Execute a command on a service:

kurtosis service exec quickstart service-c-1 'echo hello world'
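When you want the same action for several services, the commands above can be wrapped in a loop. The following is a rough sketch: for_each_service and the DRY_RUN variable are hypothetical names introduced here, and the service names service-a-1 and service-b-1 are taken from the rendered config shown earlier. By default it only prints the commands it would run.

```shell
# for_each_service is a hypothetical helper, not a Kurtosis command.
# Usage: for_each_service <enclave> <action> <service>...
# With DRY_RUN unset or set to 1, commands are printed instead of executed.
# Set DRY_RUN=0 to actually invoke kurtosis.
for_each_service() {
  enclave="$1"
  action="$2"
  shift 2
  for svc in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "kurtosis service $action $enclave $svc"
    else
      kurtosis service "$action" "$enclave" "$svc"
    fi
  done
}

# Dry run: print the logs command for each service in the quickstart enclave.
for_each_service quickstart logs service-a-1 service-b-1 service-c-1
```

The dry-run default is a deliberate choice so you can review the commands before running them against a live enclave.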

Modify your deployed application with a JSON config

Kurtosis packages take in JSON parameters, allowing developers to make high-level modifications to their deployed applications. To see how this works, run:

kurtosis run --enclave quickstart github.com/kurtosis-tech/basic-service-package \
'{"service_a_count": 2,
"service_b_count": 2,
"service_c_count": 1,
"party_mode": true}'

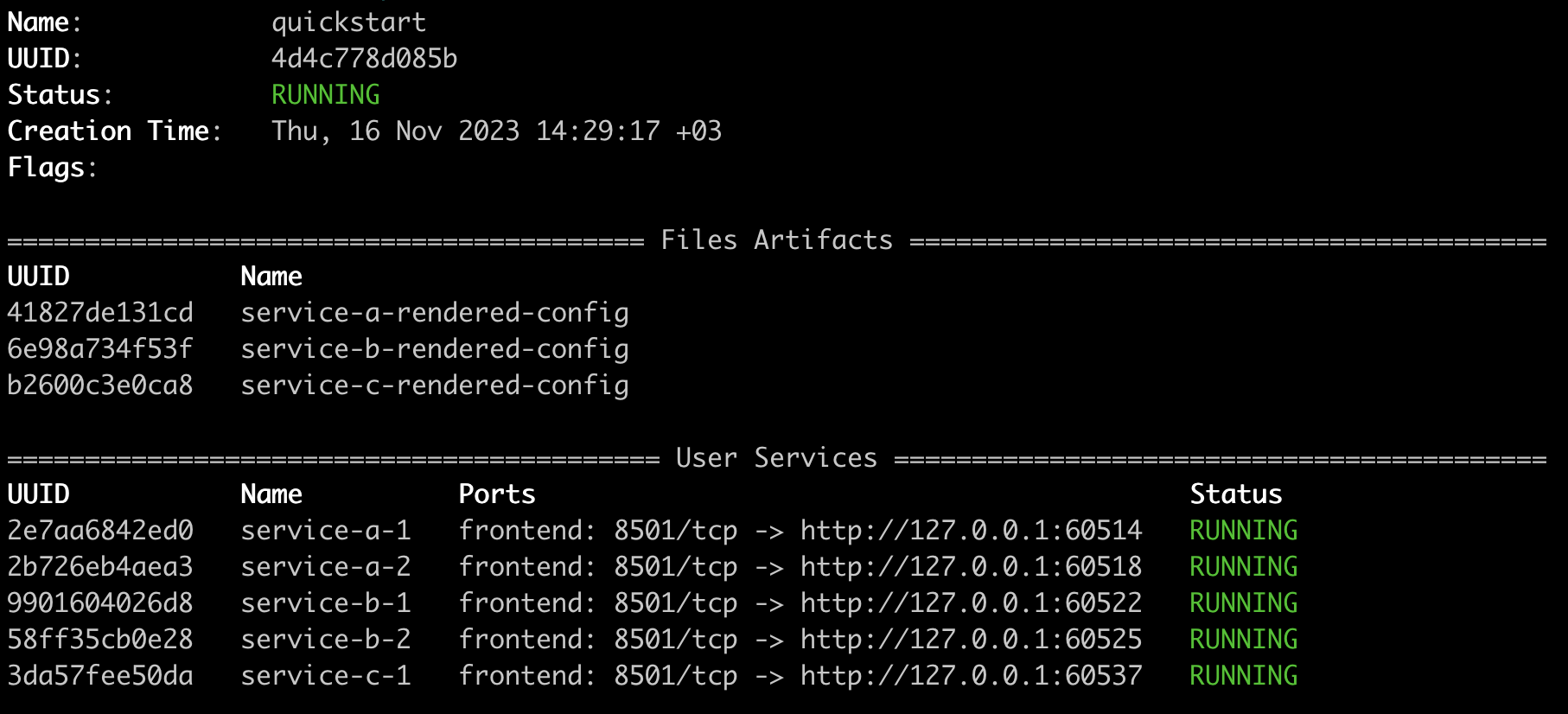

This runs the same application, but with 2 instances of Service A and Service B (perhaps to test high availability), and a feature flag turned on across all three services called party_mode. Your output should look like:

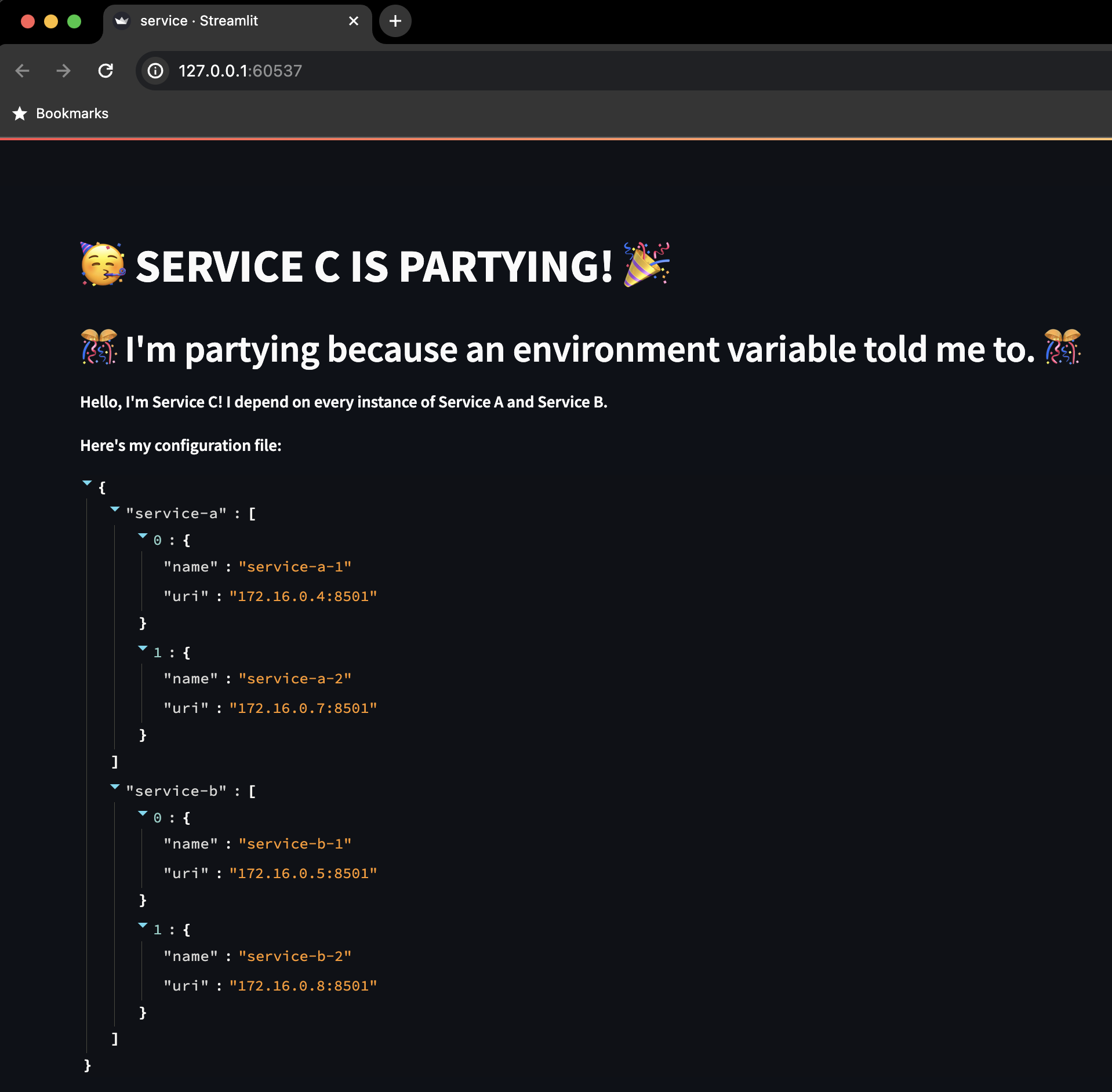
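If you expect to vary these parameters often, the JSON argument can be assembled from shell values instead of being typed by hand. This is a minimal sketch: build_args is a hypothetical helper name, and the four keys are the ones used in this guide's example. It does not validate its inputs beyond what printf enforces for the integer counts.

```shell
# build_args is a hypothetical helper, not part of the Kurtosis CLI.
# Arguments: service A count, service B count, service C count,
# and party_mode (true or false). Prints a single-line JSON object.
build_args() {
  printf '{"service_a_count": %d, "service_b_count": %d, "service_c_count": %d, "party_mode": %s}\n' \
    "$1" "$2" "$3" "$4"
}

ARGS="$(build_args 2 2 1 true)"
echo "$ARGS"

# With Kurtosis installed, the string can be passed as the final argument:
#   kurtosis run --enclave quickstart github.com/kurtosis-tech/basic-service-package "$ARGS"
```

Quoting "$ARGS" when passing it to kurtosis run keeps the JSON as a single argument, matching the single-quoted form used in the command above.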

If you go to the URL of any of the services, for example Service C, you will see the feature flag party_mode is enabled:

Each service is partying, but they're each partying for different reasons at the configuration level. By changing the JSON input to the package, you did all of these:

- Changed the number of instances of Service A and Service B

- Turned on a feature flag on Service A using its configuration file

- Turned on a feature flag on Service B using a command line flag to its server process

- Turned on a feature flag on Service C using an environment variable on its container

To inspect how each of these changes happened, check out the following:

See that the count of each service changed

You can see 2 instances of Service A and 2 instances of Service B in the CLI output:

You can verify that the configuration file of Service C has been properly changed so it can talk to all 4 of them:

kurtosis files download quickstart service-c-rendered-config

cat service-c-rendered-config/service-config.json

You should see the rendered config file with the contents:

{
  "service-a": [{"name": "service-a-1", "uri": "172.16.16.4:8501"},{"name": "service-a-2", "uri": "172.16.16.7:8501"}],
  "service-b": [{"name": "service-b-1", "uri": "172.16.16.5:8501"},{"name": "service-b-2", "uri": "172.16.16.8:8501"}]
}
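A quick way to confirm that Service C now knows about all four upstream instances is to count the entries in the rendered config. This is a sketch that assumes every instance appears with a "name" key, as in the output above. count_instances is a hypothetical helper name, and it counts text matches rather than parsing the JSON.

```shell
# count_instances is a hypothetical helper, not a Kurtosis command.
# $1 = path to a rendered service-config.json
# Prints the number of "name" entries found in the file.
count_instances() {
  grep -o '"name":' "$1" | wc -l | tr -d ' '
}
```

After the second kurtosis run, count_instances service-c-rendered-config/service-config.json should print 4 if the downloaded file matches the contents shown above.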

See a feature flag turned on by a configuration file on disk

Service A has the party_mode flag turned on by virtue of its configuration file. You can see that by downloading the service-a-rendered-config files artifact, as you've seen before:

kurtosis files download quickstart service-a-rendered-config

cat service-a-rendered-config/service-config.json

You should see the config file contents with the feature flag turned on:

{
  "party_mode": true
}

See a feature flag turned on by a command line argument

Service B has the party_mode flag turned on by virtue of a command line flag. To see this, run:

kurtosis service inspect quickstart service-b-1

You should see, in the output, the CMD block indicating that the flag was passed as a command line argument to the server process:

CMD:

--

--party-mode

See a feature flag turned on by an environment variable

Service C has the party_mode flag turned on by virtue of an environment variable. To see the environment variable flag is indeed enabled, run:

kurtosis service inspect quickstart service-c-1

In the output, you will see a block called ENV:. In that block, you should see the environment variable PARTY_MODE: true.
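Rather than scanning the inspect output by eye, you can filter it for the variable. The sketch below assumes the output contains the literal text PARTY_MODE: true, as described above. has_party_env is a hypothetical helper name, and the exact layout of the ENV: block may differ between Kurtosis versions.

```shell
# has_party_env is a hypothetical helper, not a Kurtosis command.
# Reads text on stdin (for example, the output of kurtosis service inspect)
# and reports whether a "PARTY_MODE: true" entry is present.
# Returns 0 if found, 1 otherwise.
has_party_env() {
  if grep -q 'PARTY_MODE: *true'; then
    echo "party_mode enabled via environment variable"
  else
    echo "PARTY_MODE: true not found"
    return 1
  fi
}
```

With the enclave running, you could pipe the command from this section into it: kurtosis service inspect quickstart service-c-1 | has_party_env.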

With a JSON (or YAML) interface to packages, developers don't have to dig through low-level docs, or track down the maintainers of Service A, B, or C to learn how to deploy their software in each of these different ways. They just use the arguments of the package to get their environments the way they want them.

Now that you've used the Kurtosis CLI to run a package, inspect the resulting environment, and then modify it by passing in a JSON config, you can take any of these next steps:

- To continue working with Kurtosis by using packages that have already been written, take a look through our code examples.

- To learn how to deploy packages over Kubernetes, instead of over your local Docker engine, take a look at our guide for running Kurtosis over k8s.

- To learn how to write your own package, check out our guide on writing your first package.